Implementing single sign-on (SSO) is a standard requirement for enterprises. SSO not only makes the login process more comfortable for users, it also allows companies to centrally control access to applications. Central control, however, requires that SSO is the only way for users to access the application.

In Salesforce, a user with SSO enabled has two options to access the system: (1) single sign-on and (2) username and password.

To disable access via username and password, additional features must be configured:

Disable login.salesforce.com (not enough)

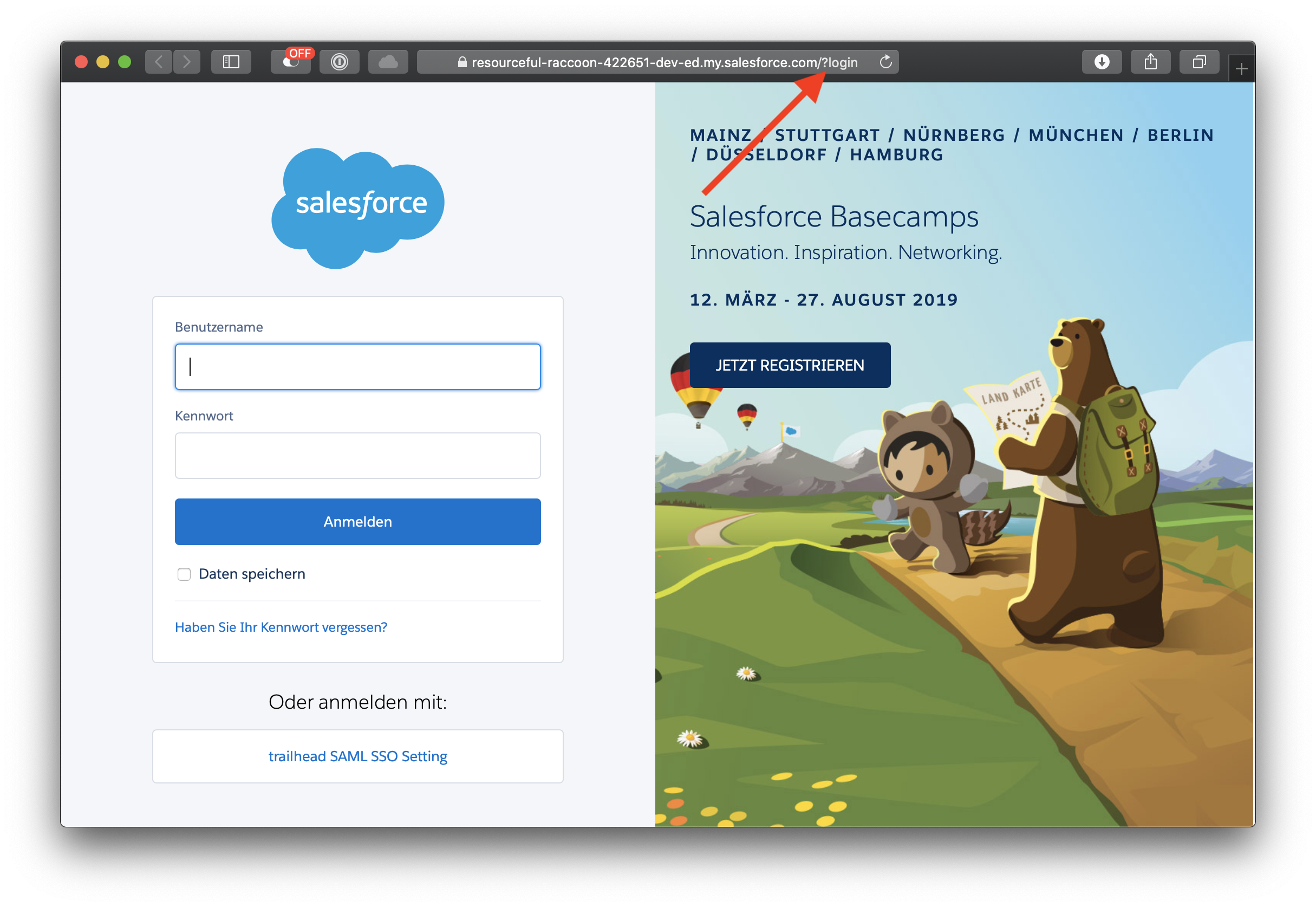

The first option is to implement Salesforce My Domain, offer the identity provider as the only login option on the My Domain login page, and prevent users from logging in via login.salesforce.com.

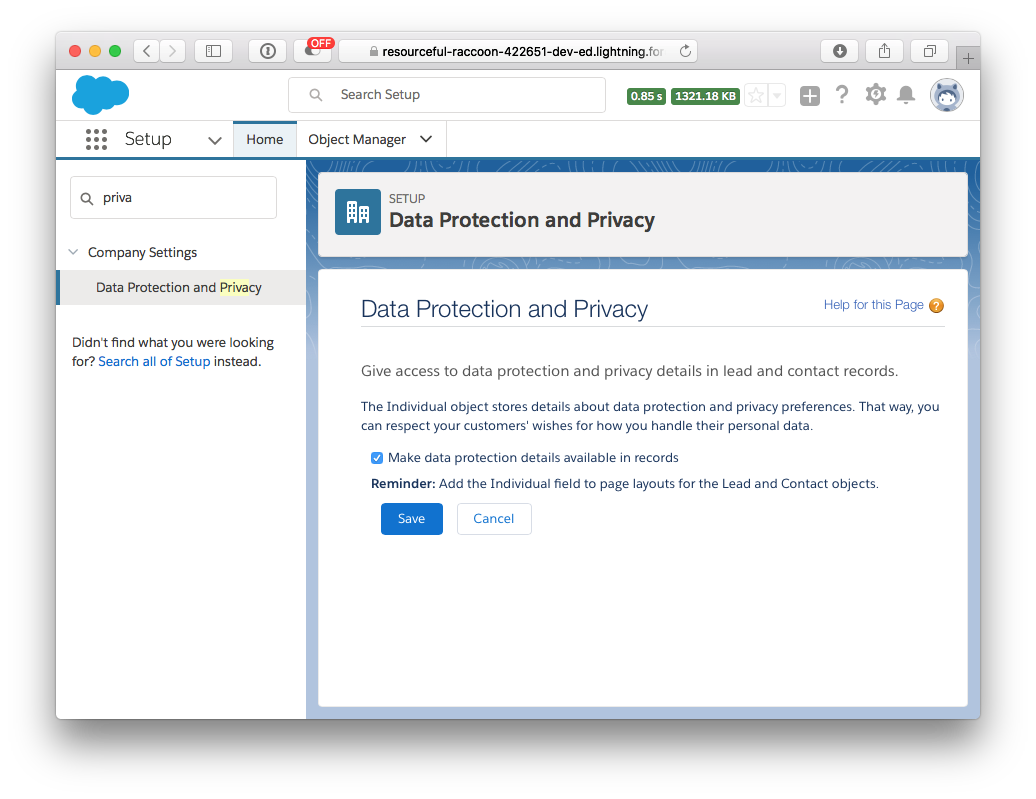

This option can be circumvented by users. They can still open <mydomain>.my.salesforce.com/?login to access the application and enter their username and password there.

User Setting “Is Single Sign-On Enabled”

One way to disable login via Username and Password is the User Setting “Is Single Sign-On Enabled”. The setting comes as part of “Delegated Authentication”.

The documentation of this user setting is minimal; Salesforce refers to it in only a handful of articles. The feature seems to have been intended to route the entered username and password to the “Delegated Authentication Web Service”, not to disable other login mechanisms.

If the user’s profile has the Is Single Sign-On Enabled user permission, then Salesforce does not validate the username and password. Instead, a Web services call is made to the user’s organization, asking it to validate the username and password.

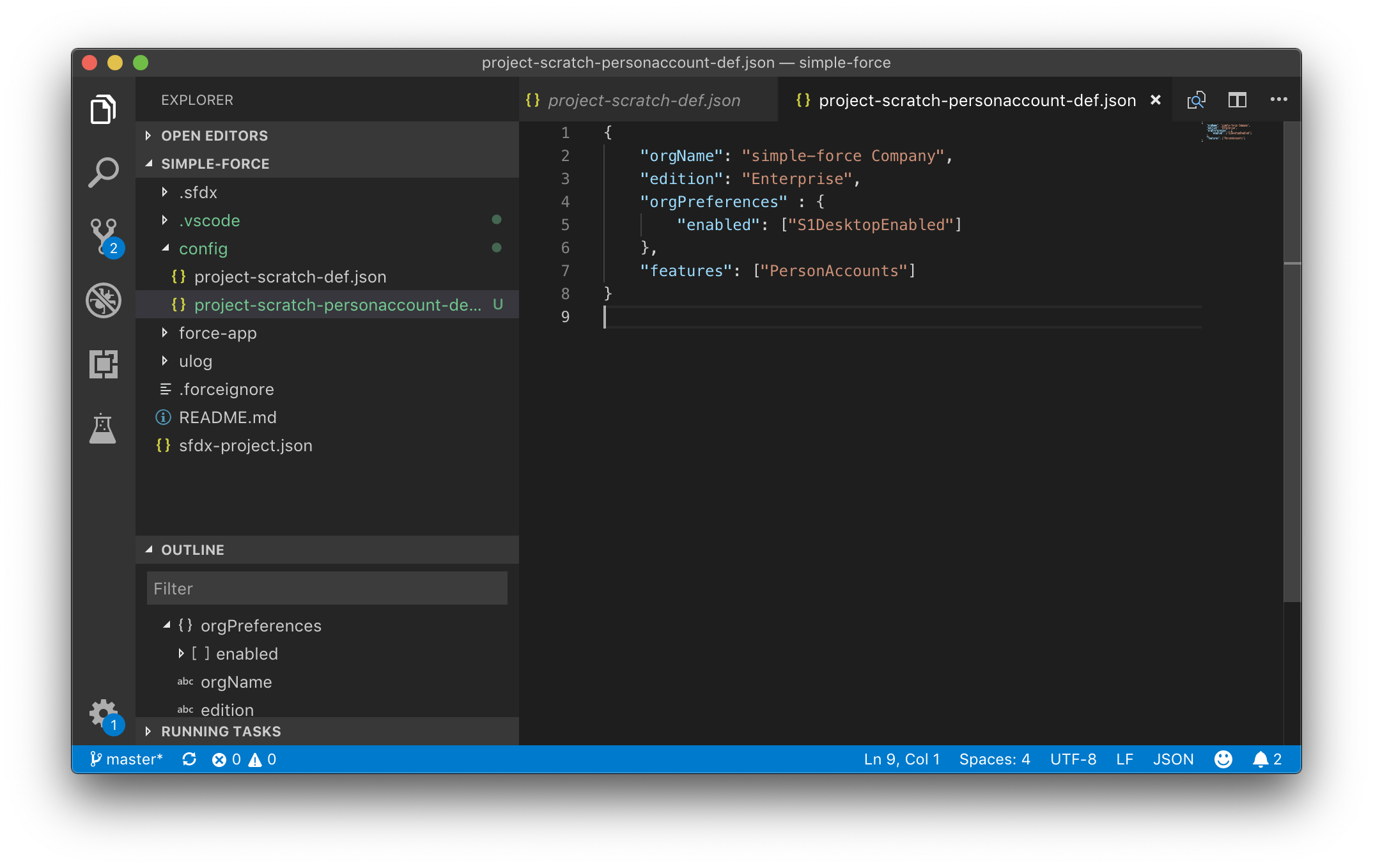

Single Sign on for Desktop and Mobile

The setting can be enabled at the profile and permission set level without entering the address of the delegated authentication service. As a side effect, users can no longer log in with username and password. Users who try will see the following error message:

We can’t log you in because you’re only allowed to use single sign-on. For help, contact your Salesforce administrator

Error Message after entering username and password

This behavior has been documented by Ashish Agarawal and multiple others on StackExchange. However, it is not officially documented and could be removed at any time. I would personally not recommend this route.

Transaction Security Policy – Salesforce Event Monitoring

Since 2016, Salesforce orgs can be protected with Salesforce Shield. As part of Shield, Transaction Security Policies can be enforced. Based on these policies, specific transactions, e.g. logins or report exports, can be blocked. Setting up a new Transaction Security Policy takes three steps:

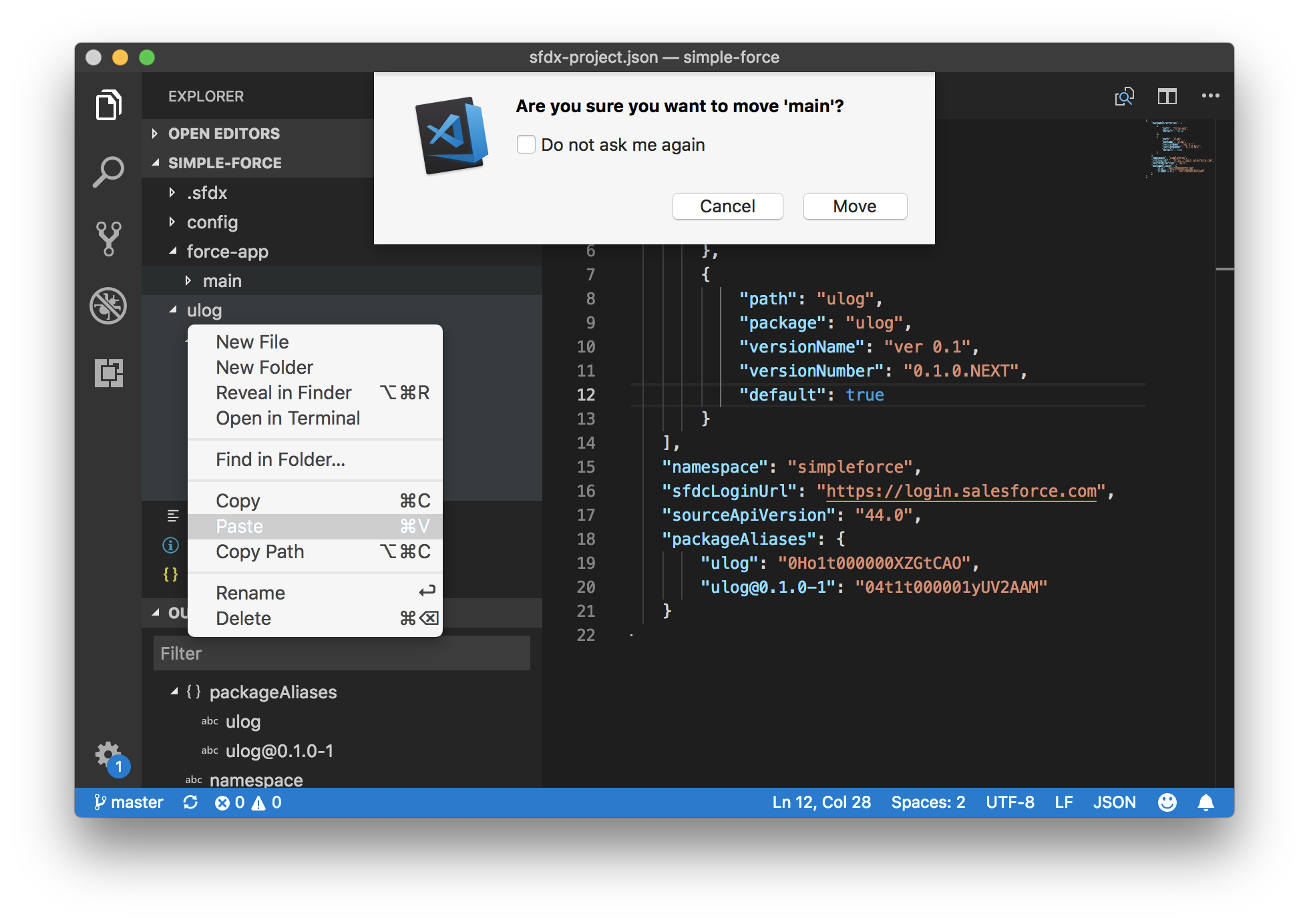

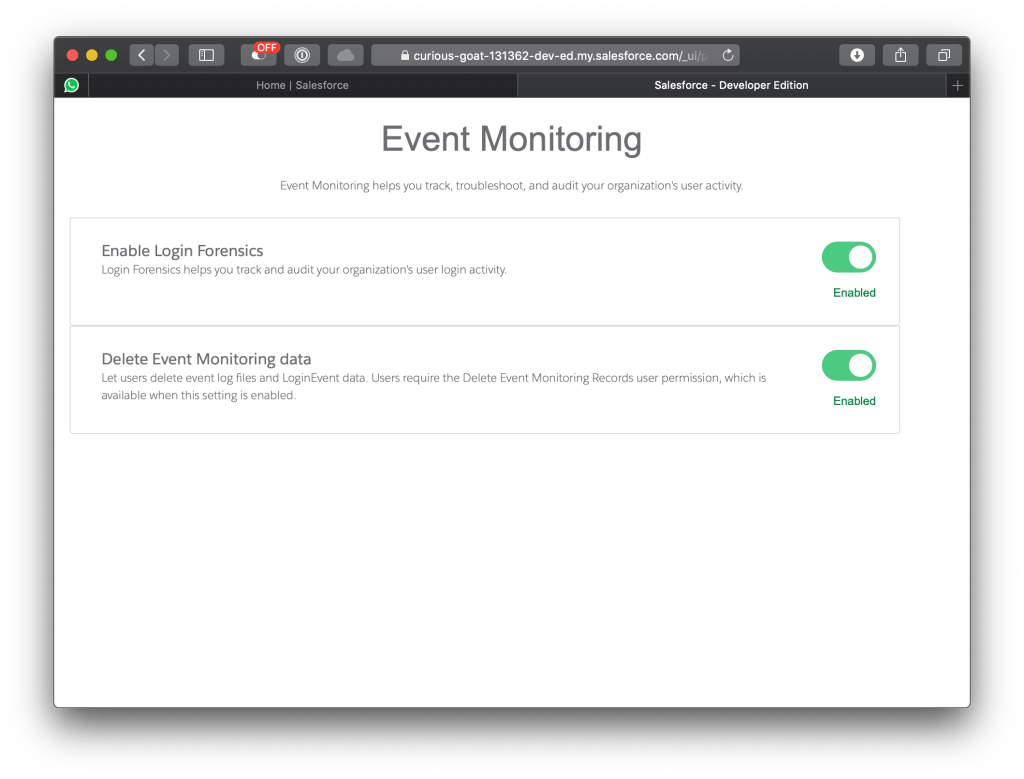

Step 1: Set up Event Monitoring

Transaction Security Policies are based on Salesforce Event Monitoring. Once the feature license for Shield is activated, Event Monitoring can be enabled:

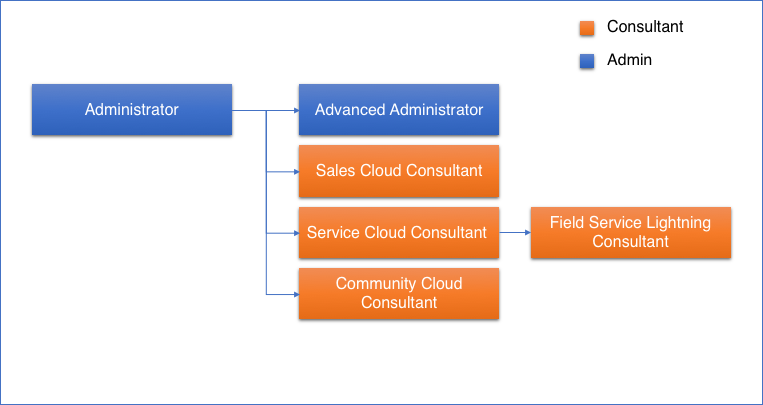

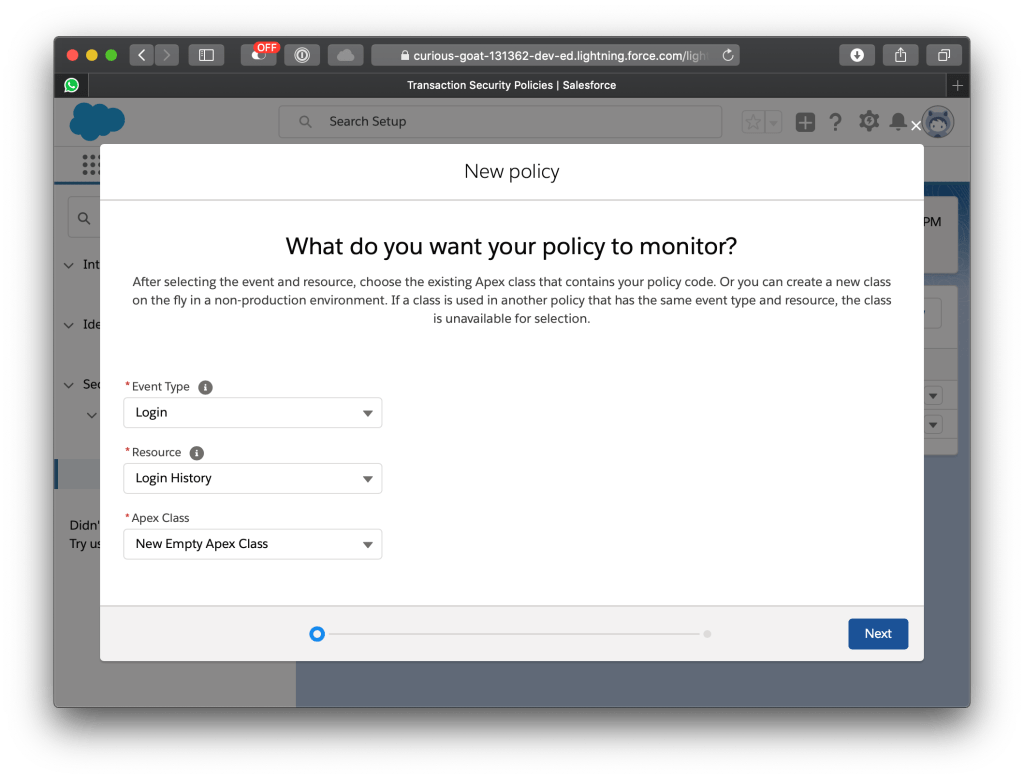

Step 2: Create a new Policy

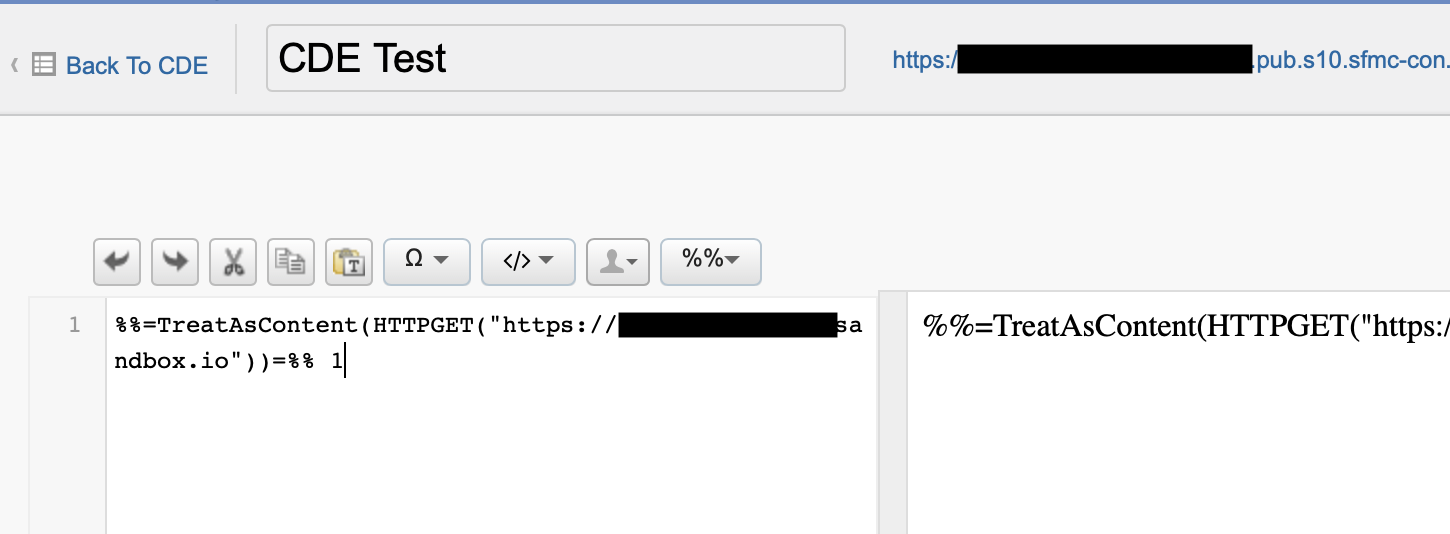

Policies are based on three components: (1) a listener, (2) a condition, and (3) a reaction. In the first step of the setup, the event type must be selected (in this example “Login”) together with the corresponding resource “Login History”.

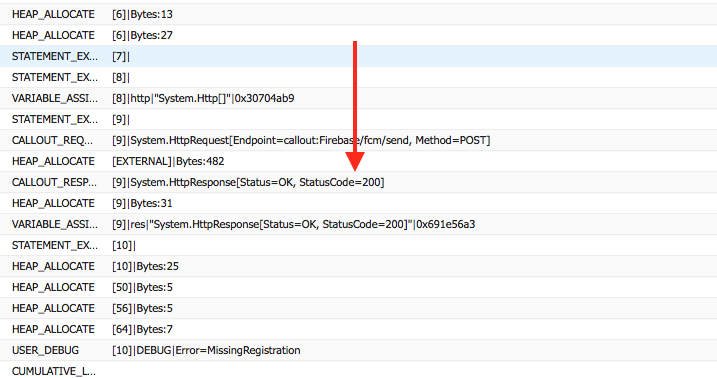

For each event that matches the listener, a short piece of code is executed. This “Condition” detects whether the policy is violated and, if so, triggers the reaction.

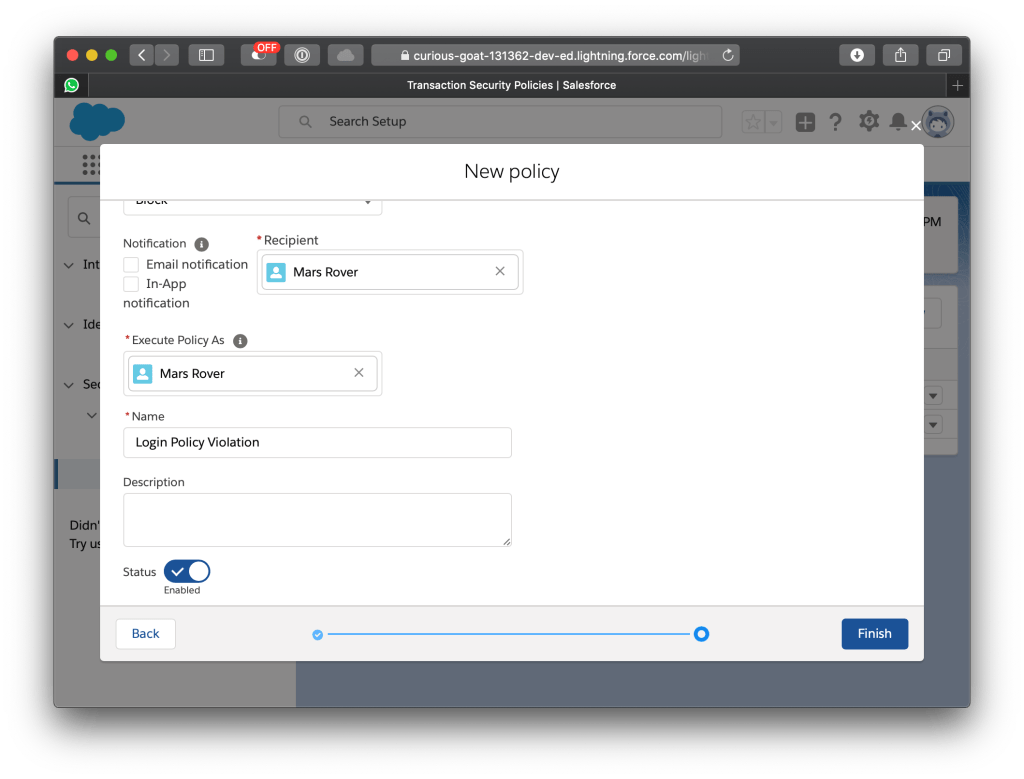

On the second screen, the reaction to a policy violation is configured. In this case, the login is blocked and the administrator is notified by email.

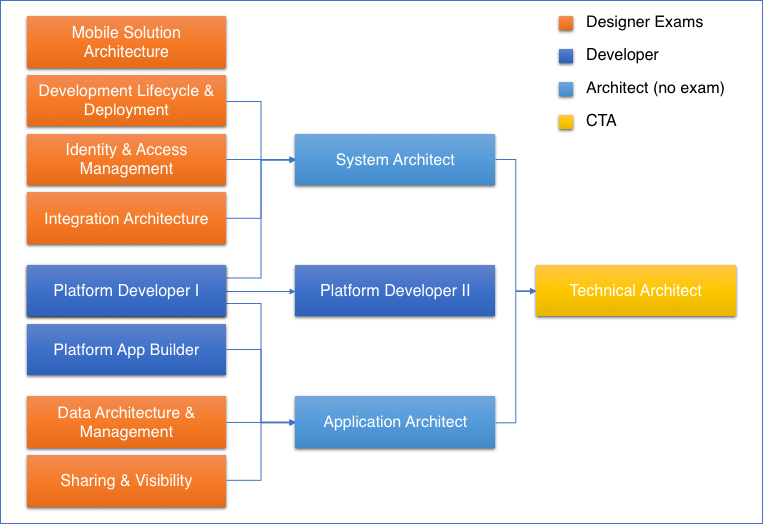

Step 3: Write a Policy Condition

To block logins through any authentication service other than the selected identity provider, I implemented a short Apex class. For each login, the class checks whether the right authentication service was used.

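    global class LoginPolicyViolationPolicyCondition implements TxnSecurity.PolicyCondition {

        // ID of the only authentication service (identity provider) allowed for logins.
        private static final String ALLOWED_AUTHENTICATION_SERVICE_ID = 'XXXX';

        // Returns true when the policy is violated, which triggers the configured reaction.
        public boolean evaluate(TxnSecurity.Event e) {
            // The login event carries the ID of the matching LoginHistory record.
            String loginHistoryId = e.data.get('LoginHistoryId');
            LoginHistory l = [
                SELECT Id, AuthenticationServiceId
                FROM LoginHistory
                WHERE Id = :loginHistoryId
            ];
            // Flag the login if it did not go through the allowed authentication service.
            return ALLOWED_AUTHENTICATION_SERVICE_ID != String.valueOf(l.AuthenticationServiceId);
        }
    }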
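The placeholder 'XXXX' has to be replaced with the ID of the allowed authentication service. One possible way to look it up is the following anonymous Apex sketch. It assumes that at least one user has already logged in through the identity provider and that the running user is allowed to read LoginHistory. The limit of 20 records is an arbitrary choice.

```apex
// Anonymous Apex sketch: list the most recent logins together with the
// authentication service that handled each of them.
for (LoginHistory lh : [
    SELECT LoginTime, LoginType, AuthenticationServiceId
    FROM LoginHistory
    ORDER BY LoginTime DESC
    LIMIT 20
]) {
    // Writes the queried fields of each record to the debug log.
    System.debug(lh);
}
```

The AuthenticationServiceId shown for a login that went through the identity provider is the value to compare against in the policy condition.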

Finally

Salesforce Shield is the out-of-the-box way to detect policy violations and react to them. In contrast to the “Delegated Authentication” option, the policies are also designed to inform administrators when a violation occurs.